本文主要分析下flink源码中flink-examples-streaming模块,为了方便分析,会把代码都拷贝在文中。

wordcount

实时统计单词数量,每来一个计算一次并输出一次。

1 | public class WordCount { |

输出的结果:

1 | 5> (miao,1) |

socket

监听socket端口输入的单词,进行单词统计。

1 | public class SocketWindowWordCount { |

本机启用监听端口:

1 | nc -l 9999 |

socket监听端口输入以下内容:

1 | miao she is a programmer |

输出的结果:

1 | she : 2 |

async

主要通过以下示例了解下AsyncFunction作用到DataStream上的使用方法。

1 | public class AsyncIOExample { |

iteration

本示例为输入int值键值对,迭代计算斐波那契数列值大于100时需要计算的步长。

斐波那契数列:

F(n) = F(n-1) + F(n-2)

假设初始键值对为(34,11),则产生的斐波那契数列为:34,11,45,56,101

经过本代码运行的结果应该是((34,11),3),需要经历3步长,即累加3次才能使得数列值101 > 100,才能打到output流中

1 | public class IterateExample { |

输出的部分结果:

1 | 6> ((43,24),3) |

join

本示例演示了如何使用DataStream API进行双流join。

1 | public class WindowJoin { |

sideoutput

当想要拆分数据流时,通常需要复制流,使用旁路输出可以直接过滤出不要的数据。示例中过滤出了长度 > 5的单词

1 | public class SideOutputExample { |

输出的结果:

1 | 6> rejected: question |

windowing

session window

示例演示了基于EventTime的会话窗口,分析了watermark生成以及触发窗口计算的时机。对于将被窗口丢弃的数据,如(“a”,1L,2),可以sideoutput。

1 | public class SessionWindowing { |

输出的结果:

1 | (a,1,1) |

在代码中添加一些日志打印,watermark生成与触发计算详情如下:

1 | Advanced watermark 0 |

Flink何时触发window ?

1 | 1. watermark > window_end_time (对于out-of-order以及正常的数据而言) |

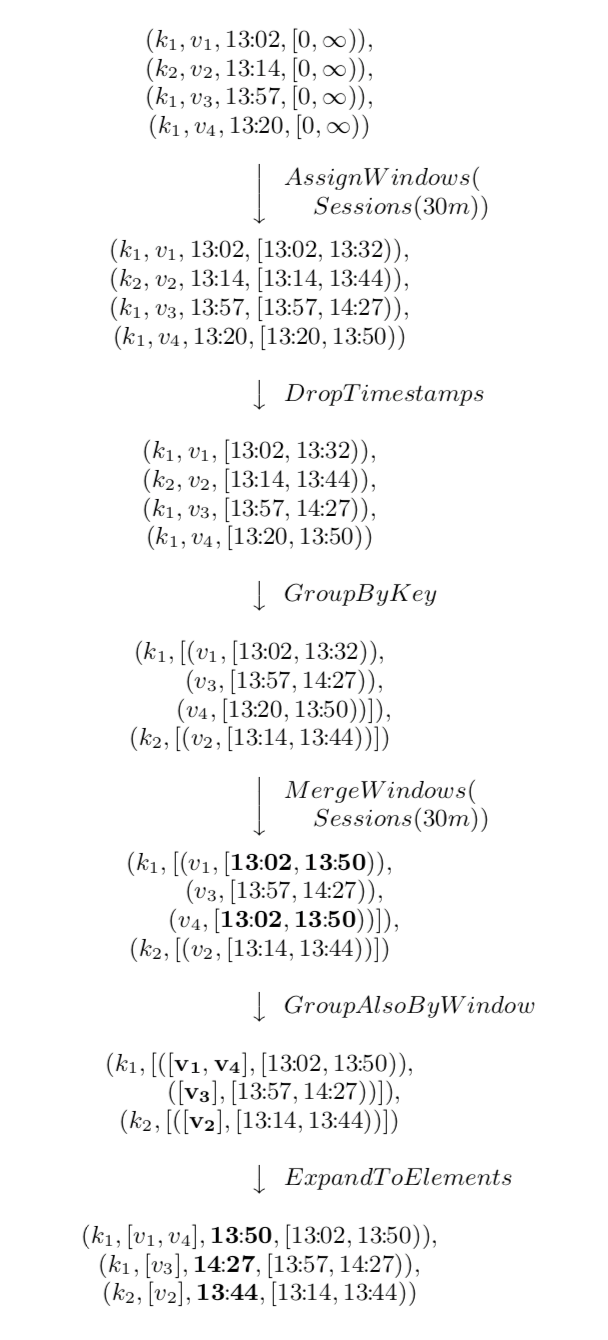

窗口合并过程示例:

count window

- slide count window

1 | public class SlideCountWindowExample { |

输出的结果:

1 | (a,12) |

每进来2个元素,就对最近的3个元素计算一遍

查看内部源码:

1 | public WindowedStream<T, KEY, GlobalWindow> countWindow(long size, long slide) { |

- tumble count window

1 | public class TumbleCountWindowExample { |

输出的结果:

1 | (a,123) |

最后一条 Tuple2.of(“b”, “0”) 被丢弃,因为最后一条数据已经无法触发计算了