Starting with version 1.9.0, Apache Flink integrates with Hive: users can access Hive metadata through Flink and read and write Hive tables.

New features

Metadata

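The ExternalCatalog that Flink previously provided was very incompletely defined and essentially unusable. A brand-new Catalog interface was introduced to replace ExternalCatalog. The new Catalog supports several kinds of metadata objects, including databases, tables and partitions.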

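A single user session can hold multiple Catalog instances and so access multiple external systems. Catalogs also plug into Flink, which lets users supply their own implementations.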
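To make the idea of several catalogs living side by side in one session concrete, here is a minimal, JDK-only sketch. `MiniCatalog`, `InMemoryCatalog` and `CatalogSession` are hypothetical names invented for illustration; they are not Flink's `org.apache.flink.table.catalog.Catalog` interface, which has many more methods. The sketch only shows the shape: a small interface that any implementation can plug into, and a session that keeps named instances of it.

```java
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;
import java.util.Optional;
import java.util.Set;

// Hypothetical minimal catalog contract (NOT Flink's Catalog interface).
interface MiniCatalog {
    List<String> listDatabases();

    List<String> listTables(String database);
}

// One pluggable implementation: databases and their tables held in memory.
class InMemoryCatalog implements MiniCatalog {
    private final Map<String, List<String>> tablesByDatabase;

    InMemoryCatalog(Map<String, List<String>> tablesByDatabase) {
        this.tablesByDatabase = Map.copyOf(tablesByDatabase);
    }

    @Override
    public List<String> listDatabases() {
        return List.copyOf(tablesByDatabase.keySet());
    }

    @Override
    public List<String> listTables(String database) {
        return tablesByDatabase.getOrDefault(database, List.of());
    }
}

// A session keeps several named catalog instances side by side.
class CatalogSession {
    private final Map<String, MiniCatalog> catalogs = new LinkedHashMap<>();

    void registerCatalog(String name, MiniCatalog catalog) {
        if (catalogs.putIfAbsent(name, catalog) != null) {
            throw new IllegalArgumentException("Catalog already registered: " + name);
        }
    }

    // Catalog names in registration order.
    Set<String> listCatalogs() {
        return catalogs.keySet();
    }

    Optional<MiniCatalog> getCatalog(String name) {
        return Optional.ofNullable(catalogs.get(name));
    }

    public static void main(String[] args) {
        CatalogSession session = new CatalogSession();
        session.registerCatalog("default_catalog",
                new InMemoryCatalog(Map.of("default_database", List.of())));
        session.registerCatalog("myhive_catalog",
                new InMemoryCatalog(Map.of("default", List.of("employee"))));
        System.out.println(session.listCatalogs());
        System.out.println(session.getCatalog("myhive_catalog")
                .map(c -> c.listTables("default"))
                .orElse(List.of()));
    }
}
```

In this sketch, listing the keys of the session map plays roughly the role that `show catalogs;` plays in the SQL Client.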

Table data

A new flink-connector-hive module was added for reading and writing the data in Hive tables.

Using Hive in the Flink SQL Client

Install flink-1.9.0

Install hive-2.3.4

Add the following dependency jars to /usr/local/flink-1.9.0/lib:

```
antlr-runtime-3.5.2.jar
```

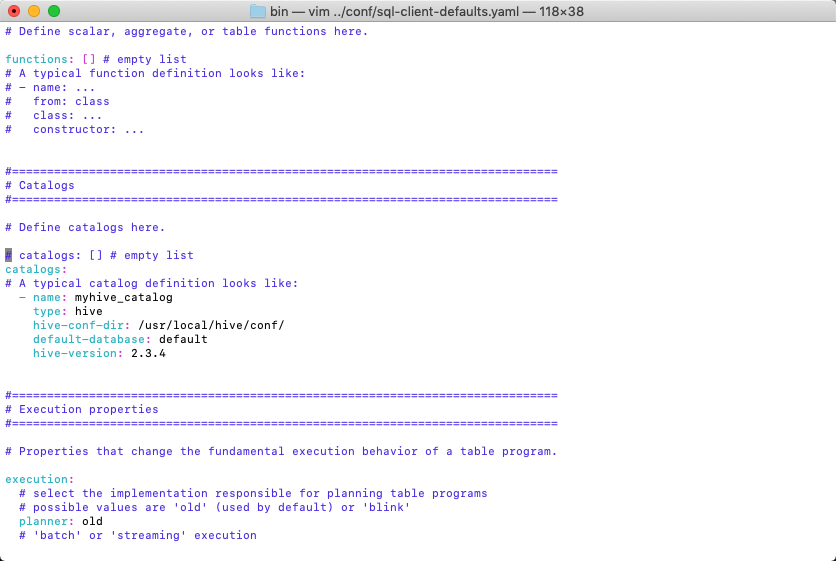
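Before starting the cluster, a quick pre-flight check can confirm that the jars are actually in place. The JDK-only sketch below reports which names from a given list are missing from a directory. Only `antlr-runtime-3.5.2.jar` is visible in the list above, so that is the only name filled in; `LibJarCheck` is a hypothetical helper, and its default path simply mirrors the install location used in this post.

```java
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;
import java.util.ArrayList;
import java.util.List;

// Hypothetical pre-flight check: which required jars are missing from Flink's lib directory?
class LibJarCheck {

    static List<String> missingJars(Path libDir, List<String> requiredJars) {
        List<String> missing = new ArrayList<>();
        for (String jar : requiredJars) {
            // A jar counts as present only if a regular file with exactly that name exists.
            if (!Files.isRegularFile(libDir.resolve(jar))) {
                missing.add(jar);
            }
        }
        return missing;
    }

    public static void main(String[] args) {
        Path libDir = Paths.get(args.length > 0 ? args[0] : "/usr/local/flink-1.9.0/lib");
        // Only the jar visible in this post; extend with the rest of your dependency list.
        List<String> required = List.of("antlr-runtime-3.5.2.jar");
        List<String> missing = missingJars(libDir, required);
        System.out.println(missing.isEmpty() ? "All listed jars present" : "Missing: " + missing);
    }
}
```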

- Edit the SQL Client configuration file

/usr/local/flink-1.9.0/conf/sql-client-defaults.yaml:

```yaml
# catalogs: [] # empty list
```
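The commented-out `catalogs: []` line is where catalog entries go. According to the Flink 1.9 Hive documentation, a HiveCatalog entry uses the keys `name`, `type: hive`, `property-version`, `hive-conf-dir` and `hive-version`. To keep all examples in one language, the sketch below is a small Java helper that prints such an entry. The catalog name `myhive_catalog` matches the one used later in this post; the `hive-conf-dir` value `/usr/local/hive-2.3.4/conf` is an assumption based on the install paths above, so point it at the directory that actually holds your `hive-site.xml`.

```java
// Sketch: renders the `catalogs` section of sql-client-defaults.yaml for one HiveCatalog.
// Keys follow the Flink 1.9 HiveCatalog docs; the conf dir path in main() is an assumption.
class HiveCatalogYaml {

    static String render(String name, String hiveConfDir, String hiveVersion) {
        return String.join("\n",
                "catalogs:",
                "  - name: " + name,
                "    type: hive",
                "    property-version: 1",
                "    hive-conf-dir: " + hiveConfDir,
                "    hive-version: " + hiveVersion,
                "");
    }

    public static void main(String[] args) {
        // Assumed location of hive-site.xml for the hive-2.3.4 install used in this post.
        System.out.print(render("myhive_catalog", "/usr/local/hive-2.3.4/conf", "2.3.4"));
    }
}
```

Running `main` prints a YAML block that replaces the `# catalogs: []` line in the configuration file.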

- Start the Flink cluster:

```shell
./start-cluster.sh
```

- Start the SQL Client:

```shell
./sql-client.sh embedded
```

- List all catalogs:

```
Flink SQL> show catalogs;
```

- Switch to a catalog, database, etc.:

```
Flink SQL> use catalog myhive_catalog;
```
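After `use catalog` (and, optionally, `use <database>`), an unqualified table name such as `employee` in the queries that follow is looked up in the current catalog and database. The plain-Java sketch below models that completion step: a 1-part name is prefixed with the current catalog and database, a 2-part name with the current catalog. `NameResolver` is hypothetical and deliberately simplified: it ignores quoted identifiers, and Flink's actual lookup may differ in edge cases. It also assumes that switching catalog resets the current database to that catalog's default database, which for a HiveCatalog is typically `default`.

```java
import java.util.List;

// Hypothetical, simplified model of how identifiers are completed after `use catalog` / `use`.
// Not Flink's implementation: quoted identifiers and Flink's own lookup rules are ignored.
class NameResolver {
    private String currentCatalog;
    private String currentDatabase;

    NameResolver(String currentCatalog, String currentDatabase) {
        this.currentCatalog = currentCatalog;
        this.currentDatabase = currentDatabase;
    }

    // `use catalog x;` is assumed here to also switch to x's default database.
    void useCatalog(String catalog, String catalogDefaultDatabase) {
        this.currentCatalog = catalog;
        this.currentDatabase = catalogDefaultDatabase;
    }

    // `use y;` switches the database inside the current catalog.
    void useDatabase(String database) {
        this.currentDatabase = database;
    }

    // Completes a 1-, 2- or 3-part name to [catalog, database, table].
    List<String> resolve(String identifier) {
        String[] parts = identifier.split("\\.");
        switch (parts.length) {
            case 1:
                return List.of(currentCatalog, currentDatabase, parts[0]);
            case 2:
                return List.of(currentCatalog, parts[0], parts[1]);
            case 3:
                return List.of(parts);
            default:
                throw new IllegalArgumentException("Unsupported identifier: " + identifier);
        }
    }

    public static void main(String[] args) {
        NameResolver resolver = new NameResolver("default_catalog", "default_database");
        resolver.useCatalog("myhive_catalog", "default");
        System.out.println(resolver.resolve("employee"));
    }
}
```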

View the table schema:

```
Flink SQL> describe employee;
```

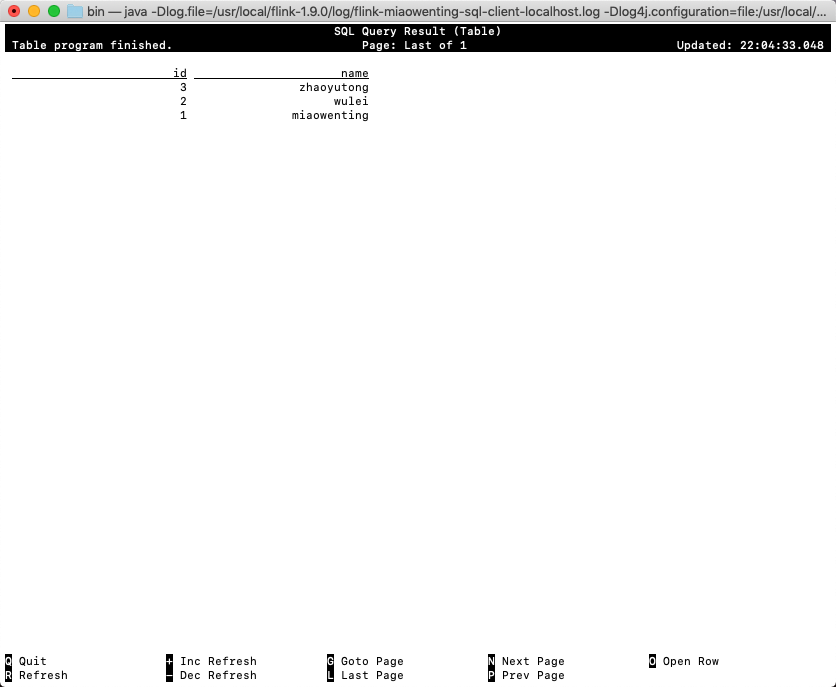

Query the table data:

```
Flink SQL> select * from employee;
```

Inserting data into the table does not work yet; this is still to be resolved:

```
Flink SQL> insert into employee(id,name) values (4,'test');
```

Using Hive in the Table API

Creating a HiveCatalog in the Table API

```java
String name = "myhive_catalog";
```
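In Flink 1.9 the catalog itself is created with `new HiveCatalog(name, defaultDatabase, hiveConfDir, version)` from the `org.apache.flink.table.catalog.hive` package and registered with `tableEnv.registerCatalog(name, hive)`. That code needs flink-connector-hive and the Hive jars on the classpath, so the JDK-only sketch below covers just one pre-flight step: confirming that the directory intended as `hiveConfDir` contains `hive-site.xml`, which is where Hive client settings such as the metastore URI come from. `HiveConfDirCheck` is a hypothetical helper, and the default path is an assumption based on the hive-2.3.4 install above.

```java
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;

// Hypothetical pre-flight check for the hiveConfDir argument of HiveCatalog (JDK only, no Flink classes).
class HiveConfDirCheck {

    // The directory is usable as hiveConfDir only if it holds a hive-site.xml file.
    static boolean hasHiveSite(Path hiveConfDir) {
        return Files.isRegularFile(hiveConfDir.resolve("hive-site.xml"));
    }

    public static void main(String[] args) {
        // Assumed path for the hive-2.3.4 install in this post.
        Path confDir = Paths.get(args.length > 0 ? args[0] : "/usr/local/hive-2.3.4/conf");
        if (hasHiveSite(confDir)) {
            System.out.println("OK: " + confDir + " contains hive-site.xml");
        } else {
            System.out.println("hive-site.xml not found in " + confDir);
        }
    }
}
```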

Reading and writing Hive tables in the Table API

```java
TableEnvironment tableEnv = ...;
```
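Once the catalog is registered, a Flink 1.9 `TableEnvironment` can switch to it with `useCatalog(...)`, read with `sqlQuery(...)`, and write with `sqlUpdate(...)` followed by `execute(jobName)`. Inside those SQL strings it is safest to quote names with backticks: Hive's default database is called `default`, and DEFAULT is a reserved keyword in Flink SQL. The JDK-only helper below builds such a quoted, fully qualified name. `QualifiedName` is hypothetical, and doubling an embedded backtick assumes the escaping rule used for backtick-quoted identifiers by Calcite-based parsers such as Flink's.

```java
import java.util.List;
import java.util.stream.Collectors;

// Hypothetical helper (not a Flink API): builds a backtick-quoted, fully qualified table name
// for use inside a SQL string, e.g. one passed to tableEnv.sqlQuery(...).
class QualifiedName {

    static String quote(String identifier) {
        // An embedded backtick is escaped by doubling it.
        return "`" + identifier.replace("`", "``") + "`";
    }

    static String of(String catalog, String database, String table) {
        return List.of(catalog, database, table).stream()
                .map(QualifiedName::quote)
                .collect(Collectors.joining("."));
    }

    public static void main(String[] args) {
        String target = of("myhive_catalog", "default", "employee");
        // Query text one might hand to tableEnv.sqlQuery(...) in a Flink job.
        System.out.println("SELECT * FROM " + target);
    }
}
```

Running `main` prints ``SELECT * FROM `myhive_catalog`.`default`.`employee` ``, which stays valid even though `default` is a keyword.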