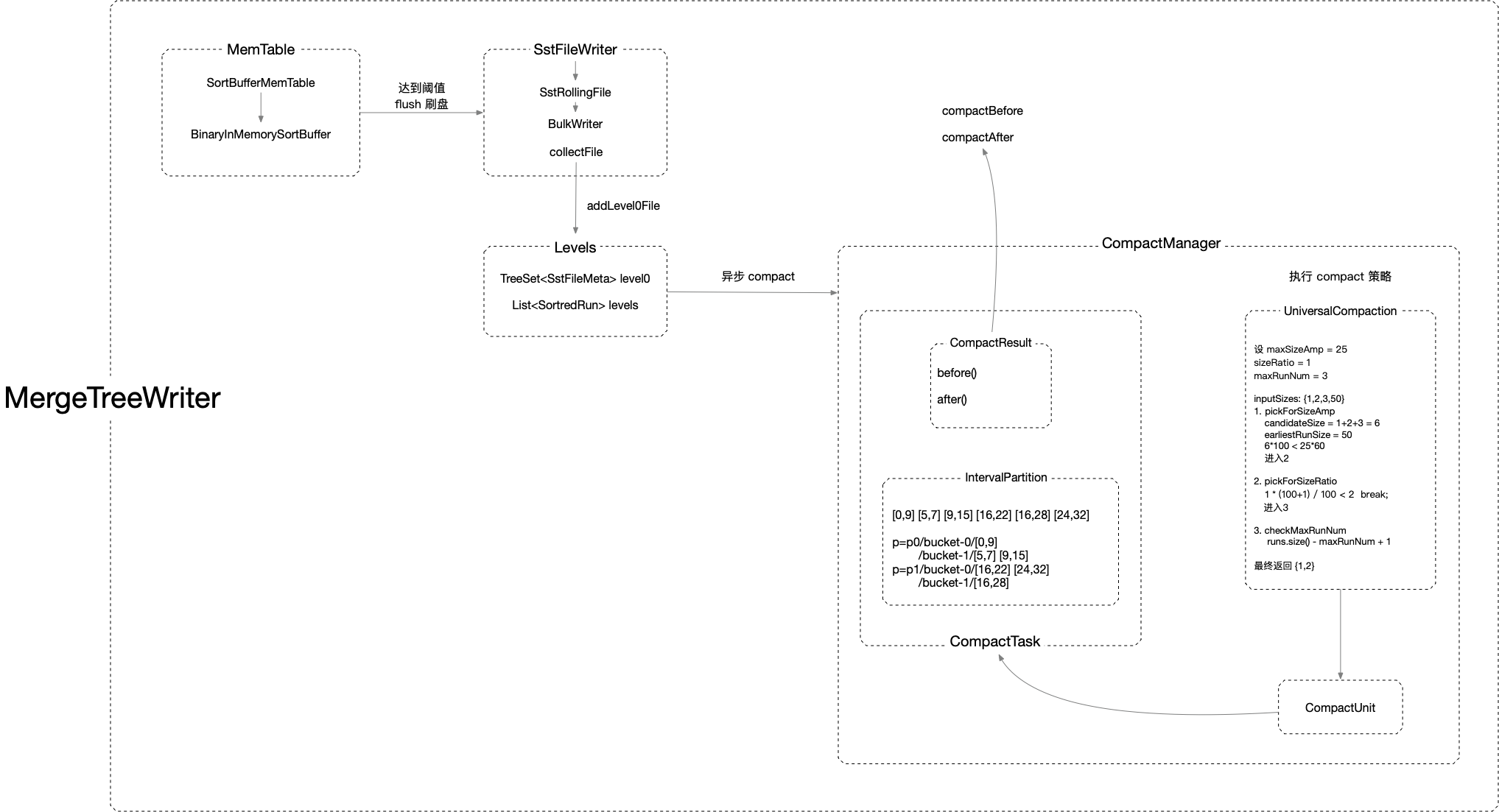

This post walks through the source code of MergeTreeWriter.

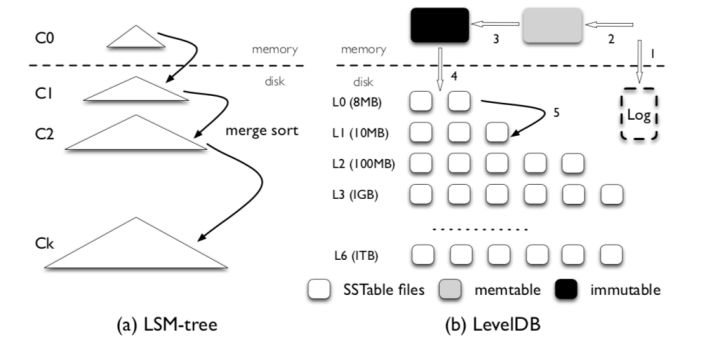

leveldb

MergeTreeWriter

Test cases

SstFileTest

/** Tests for {@link SstFileReader} and {@link SstFileWriter}. */

/** Random {@link SstFileMeta} generator. */

/** Random {@link KeyValue} generator. */

The generator (gen) keeps producing records whose key is (shopId, orderId):

sequenceNumber -> 0,key-> (6,-5707870044488831310), partition -> 202111118,bucket -> 0,op -> ADD, value -> 2780980699221738542

The memTable for partition='202111109', bucket=0 is the first to reach the memTableCapacity threshold of 20. Its data looks like this:

sequenceNumber -> 6,key-> (6,5937024096732189195), partition -> 202111109,bucket -> 0,op -> ADD, value -> 8884526624823745720

Sorting the memTable data by key, then by sequenceNumber, gives:

sequenceNumber -> 43,key-> (0,-6226701972071386686), partition -> 202111109,bucket -> 0,op -> ADD, value -> 0

Aggregating and deduplicating by key then gives:

sequenceNumber -> 43,key-> (0,-6226701972071386686), partition -> 202111109,bucket -> 0,op -> ADD, value -> 0
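The sort-then-deduplicate step can be sketched in a few lines of Java. This is only an illustration under stated assumptions: `MemTableSortDedupSketch` and `Kv` are hypothetical names (not the project's classes), partition, bucket and op are left out, and "deduplicate" is assumed to mean keeping the record with the highest sequenceNumber for each key.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.Comparator;
import java.util.List;

/** Hypothetical sketch of "sort, then deduplicate by key"; not the project's own classes. */
class MemTableSortDedupSketch {

    /** Simplified record: partition, bucket and op are omitted. */
    static final class Kv {
        final int shopId;
        final long orderId;
        final long sequenceNumber;
        final long value;

        Kv(int shopId, long orderId, long sequenceNumber, long value) {
            this.shopId = shopId;
            this.orderId = orderId;
            this.sequenceNumber = sequenceNumber;
            this.value = value;
        }

        boolean sameKey(Kv other) {
            return shopId == other.shopId && orderId == other.orderId;
        }

        @Override
        public String toString() {
            return "sequenceNumber -> " + sequenceNumber
                    + ", key -> (" + shopId + "," + orderId + "), value -> " + value;
        }
    }

    /** Orders by key (shopId, orderId) first, then by sequenceNumber. */
    static final Comparator<Kv> BY_KEY_THEN_SEQ =
            Comparator.comparingInt((Kv kv) -> kv.shopId)
                    .thenComparingLong(kv -> kv.orderId)
                    .thenComparingLong(kv -> kv.sequenceNumber);

    /** Sorts a copy of the input and keeps only the newest record of every key. */
    static List<Kv> sortAndDeduplicate(List<Kv> memTable) {
        List<Kv> sorted = new ArrayList<>(memTable);
        sorted.sort(BY_KEY_THEN_SEQ);

        List<Kv> result = new ArrayList<>();
        for (Kv kv : sorted) {
            int last = result.size() - 1;
            if (last >= 0 && result.get(last).sameKey(kv)) {
                // Same key: the record seen later has the larger sequenceNumber, so it wins.
                result.set(last, kv);
            } else {
                result.add(kv);
            }
        }
        return result;
    }

    public static void main(String[] args) {
        List<Kv> memTable = Arrays.asList(
                new Kv(6, 5L, 0L, 100L),
                new Kv(0, -7L, 1L, 200L),
                new Kv(6, 5L, 2L, 300L)); // overwrites the first record
        sortAndDeduplicate(memTable).forEach(System.out::println);
    }
}
```

Because records of the same key end up adjacent after sorting, a single linear pass is enough to collapse them.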

ManifestFileTest

/** Tests for {@link ManifestFile}. */

/** Random {@link ManifestEntry} generator. */

sst files:

sst file: partition -> 202111119,bucket -> 1,level -> 1,sst file name -> sst-40dab8b8-a7c0-4707-9743-b3a91c2d12f7

manifestEntry:

manifestEntry: valueKind -> ADD,partition -> 202111119,bucket -> 1,level -> 1,sst file name -> sst-40dab8b8-a7c0-4707-9743-b3a91c2d12f7

ManifestListTest

/** Tests for {@link ManifestList}. */

IntervalPartitionTest

/** Tests for {@link IntervalPartition}. */

/** Algorithm to partition several sst files into the minimum number of {@link SortedRun}s. */
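One classic way to split key ranges into the minimum number of non-overlapping groups is greedy interval partitioning with a min-heap. The sketch below shows that technique only. It is not taken from the `IntervalPartition` source: `IntervalPartitionSketch` and `SstRange` are hypothetical names, keys are plain `int`s, and key ranges are assumed to be closed intervals.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.Comparator;
import java.util.List;
import java.util.PriorityQueue;

/** Hypothetical sketch of greedy interval partitioning; the real class may differ. */
class IntervalPartitionSketch {

    /** An sst file reduced to its closed key range [minKey, maxKey]. */
    static final class SstRange {
        final String name;
        final int minKey;
        final int maxKey;

        SstRange(String name, int minKey, int maxKey) {
            this.name = name;
            this.minKey = minKey;
            this.maxKey = maxKey;
        }
    }

    /** Returns the runs; the files inside one run never overlap. */
    static List<List<SstRange>> partition(List<SstRange> files) {
        List<SstRange> sorted = new ArrayList<>(files);
        sorted.sort(Comparator.comparingInt((SstRange f) -> f.minKey)
                .thenComparingInt(f -> f.maxKey));

        // Min-heap of runs, ordered by the max key of the last file in each run.
        PriorityQueue<List<SstRange>> runs = new PriorityQueue<>(
                Comparator.comparingInt((List<SstRange> run) -> run.get(run.size() - 1).maxKey));

        for (SstRange file : sorted) {
            List<SstRange> earliest = runs.peek();
            if (earliest != null && earliest.get(earliest.size() - 1).maxKey < file.minKey) {
                // The run that ends first has room: append the file to it.
                runs.poll();
                earliest.add(file);
                runs.add(earliest);
            } else {
                // Every existing run overlaps this file: open a new run.
                List<SstRange> run = new ArrayList<>();
                run.add(file);
                runs.add(run);
            }
        }
        return new ArrayList<>(runs);
    }

    public static void main(String[] args) {
        List<SstRange> files = Arrays.asList(
                new SstRange("sst-a", 1, 5),
                new SstRange("sst-b", 2, 6),
                new SstRange("sst-c", 6, 9),
                new SstRange("sst-d", 7, 10));
        for (List<SstRange> run : partition(files)) {
            StringBuilder line = new StringBuilder("run:");
            for (SstRange f : run) {
                line.append(' ').append(f.name).append('[').append(f.minKey)
                        .append(',').append(f.maxKey).append(']');
            }
            System.out.println(line);
        }
    }
}
```

Processing files in order of their minimum key means a new run is opened only when the file overlaps the last file of every existing run, which is why the number of runs stays minimal.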

UniversalCompactionTest

- testSizeAmplification

/** Test for {@link UniversalCompaction}. */

- testSizeRatio

/** Test for {@link UniversalCompaction}. */
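The two test names match the two triggers of RocksDB-style universal compaction: size amplification and size ratio. The sketch below illustrates those two checks as RocksDB defines them. It is an assumption that `UniversalCompaction` follows the same rules. The class name, method names and parameters are hypothetical, and runs are assumed to be ordered from newest (index 0) to oldest (last index).

```java
/** Hypothetical sketch of RocksDB-style universal compaction triggers. */
class UniversalCompactionSketch {

    /**
     * Size amplification: true when the runs above the oldest run together exceed
     * maxSizeAmpPercent percent of the oldest run's size.
     */
    static boolean exceedsSizeAmplification(long[] runSizes, int maxSizeAmpPercent) {
        if (runSizes.length < 2) {
            return false;
        }
        long upper = 0;
        for (int i = 0; i < runSizes.length - 1; i++) {
            upper += runSizes[i];
        }
        long oldest = runSizes[runSizes.length - 1];
        return upper * 100 > (long) maxSizeAmpPercent * oldest;
    }

    /**
     * Size ratio: starting from the newest run, keep adding the next run while the
     * accumulated size, enlarged by sizeRatioPercent, is at least the next run's size.
     * Returns how many leading runs would be merged together.
     */
    static int pickBySizeRatio(long[] runSizes, int sizeRatioPercent) {
        if (runSizes.length == 0) {
            return 0;
        }
        long candidate = runSizes[0];
        int count = 1;
        while (count < runSizes.length
                && candidate * (100 + sizeRatioPercent) >= runSizes[count] * 100) {
            candidate += runSizes[count];
            count++;
        }
        return count;
    }

    public static void main(String[] args) {
        long[] sizes = {1, 1, 1, 50};
        System.out.println("size amplification: " + exceedsSizeAmplification(sizes, 200));
        System.out.println("runs picked by size ratio: " + pickBySizeRatio(sizes, 1));
    }
}
```

A pick that returns only one run means there is nothing worth merging under the size-ratio rule.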

MergeTreeTest

/** Tests for {@link MergeTreeReader} and {@link MergeTreeWriter}. */

The test case generates data in batches of 200 records. At sequenceNumber = 186 the write to the memTable fails, which triggers the first flush:

sequenceNumber -> 0, ValueKind -> ADD, key -> +I(79), value -> +I(-1282339797)

After QuickSort.sort(buffer), the records are ordered by key, then by sequenceNumber:

sequenceNumber -> 97, ValueKind -> ADD, key -> +I(0), value -> +I(964697602)

MergeTreeWriter.flush() iterates over the data via memTable.iterator() and writes it to the bucket-0/sst-* files through SstFileWriter.write().
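The write path described above (put into the memTable, flush when the put fails, then retry) can be sketched as follows. This is a simplified illustration, not the MergeTreeWriter code: every class and method name is hypothetical, the memTable is bounded by record count rather than by buffer memory, and an in-memory list stands in for the bucket-0/sst-* files.

```java
import java.util.ArrayList;
import java.util.Comparator;
import java.util.List;

/** Hypothetical sketch of "flush when the memTable rejects a write". */
class FlushOnFullSketch {

    static final class Rec {
        final long sequenceNumber;
        final int key;
        final int value;

        Rec(long sequenceNumber, int key, int value) {
            this.sequenceNumber = sequenceNumber;
            this.key = key;
            this.value = value;
        }
    }

    /** Bounded buffer: put() returns false instead of growing. */
    static final class BoundedMemTable {
        private final int capacity;
        private final List<Rec> records = new ArrayList<>();

        BoundedMemTable(int capacity) {
            this.capacity = capacity;
        }

        boolean put(Rec rec) {
            if (records.size() >= capacity) {
                return false;
            }
            records.add(rec);
            return true;
        }

        /** Returns a copy sorted by key, then by sequenceNumber. */
        List<Rec> sortedRecords() {
            List<Rec> sorted = new ArrayList<>(records);
            sorted.sort(Comparator.comparingInt((Rec r) -> r.key)
                    .thenComparingLong(r -> r.sequenceNumber));
            return sorted;
        }

        boolean isEmpty() {
            return records.isEmpty();
        }

        void clear() {
            records.clear();
        }
    }

    static final class Writer {
        private final BoundedMemTable memTable;
        private long nextSequenceNumber = 0;
        /** Each inner list stands in for one flushed sst file. */
        final List<List<Rec>> flushedFiles = new ArrayList<>();

        Writer(int memTableCapacity) {
            this.memTable = new BoundedMemTable(memTableCapacity);
        }

        void write(int key, int value) {
            Rec rec = new Rec(nextSequenceNumber++, key, value);
            if (!memTable.put(rec)) {
                flush(); // make room, then retry once
                if (!memTable.put(rec)) {
                    throw new IllegalStateException("memTable cannot hold a single record");
                }
            }
        }

        void flush() {
            if (!memTable.isEmpty()) {
                flushedFiles.add(memTable.sortedRecords());
                memTable.clear();
            }
        }
    }

    public static void main(String[] args) {
        Writer writer = new Writer(3);
        int[] keys = {79, 0, 42, 7};
        for (int key : keys) {
            writer.write(key, key * 10);
        }
        writer.flush();
        System.out.println("flushed files: " + writer.flushedFiles.size());
    }
}
```

The record that triggered the flush is not lost: it keeps its sequenceNumber and becomes the first record of the next memTable.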

Key implementation

MergeTreeWriter

/** A {@link RecordWriter} to write records and generate {@link Increment}. */

SortBufferMemTable

/** A {@link MemTable} which stores records in {@link BinaryInMemorySortBuffer}. */

CompactManager

/** Manager to submit compaction task. */

References

lsm-trie git

LSM-trie_An_LSM-tree-based_Ultra-Large_Key-Value_Store_for_Small_Data.pdf

Skip_Lists_A_Probabilistic_Alternative_to_Balanced_Trees.pdf

The_Log-Structured_Merge-Tree.pdf

WiscKey_Separating_Keys_from_Values_in_SSD-conscious_Storage.pdf

A Brief Analysis of the LSM-tree (浅析 LSM-tree)

Designing Data-Intensive Applications (talk video)

《精通LevelDB》 (Mastering LevelDB), published January 2022